I have done two small vibecoding projects recently, and after reviewing the code that was generated for both of them I am struck by a single thought... I've never seen (human) code like this!

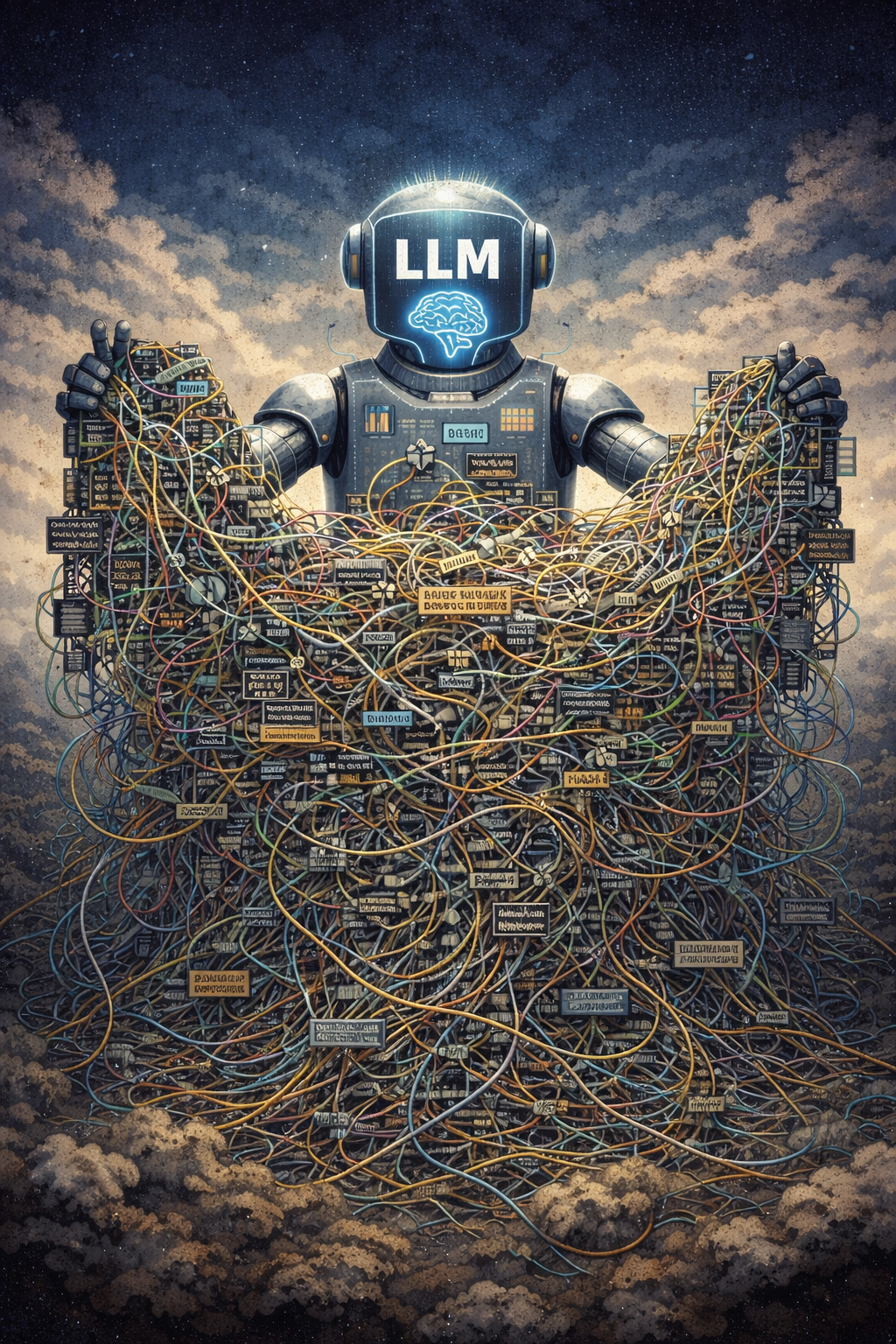

I feel like LLMs tend to generate code that is locally good but has no coherent narrative or larger abstractions. It (mostly) gets the idea of encapsulating things in functions, but I almost feel like it does not quite understand why it does that. It will create one-line functions. It will repeat a pattern 20 times in a file without bothering to make a function out of it. It will inline code that it explicitly wrote a function to handle, seemingly having forgotten that it did so. I have yet to see an LLM do any sort of architecture or design of a system. I have yet to see an LLM generate even a proto-DSL of a problem domain. I have yet to see an LLM even recognize the desire to move up the tree of abstraction.
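To make that concrete, here is a tiny made-up sketch of the kind of thing I mean. It is not taken from either project; every name in it (`add_one`, `normalize`, `build_index_generated_style`, `build_index_with_helper`) is hypothetical and exists only for illustration.

```python
# Hypothetical illustration only; none of these names come from a real project.

def add_one(x):
    # A one-line function that adds little over writing `x + 1` at the call site.
    return x + 1


def normalize(name):
    # A helper that was written once to clean up a name...
    return name.strip().lower()


def build_index_generated_style(records):
    index = {}
    for rec in records:
        # ...and then re-implemented inline, as if normalize() had been forgotten.
        key = rec["name"].strip().lower()
        index[key] = rec
    return index


def build_index_with_helper(records):
    # Same behavior, but reusing the helper that already exists.
    return {normalize(rec["name"]): rec for rec in records}


records = [{"name": "  Alice "}, {"name": "BOB"}]

# Both versions produce the same index; only the internal shape differs.
assert build_index_generated_style(records) == build_index_with_helper(records)
```

Both versions work, which is sort of the point. The difference only shows up when you have to read or change the thing.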

And yet, despite all this, it works sometimes!

Now, my philosophy is that working code beats ideal unrealized code any day of the week. However, there are a few things that make LLMs different from human beings in terms of the code artifacts that they generate.

- They have (almost) no taste.

- They don't seem to climb the abstraction tree.

- They are infinitely patient.

- They don't get bored or tired of repeating themselves.

- They can process a superhuman amount of text.

I'm reminded of a quote by our "Programmer-at-Arms" hero in "A Deepness in the Sky":

"Over thousands of years, the machine memories have been filled with programs that can help. But like Brent says, many of those programs are lies, all of them are buggy, and only the top-level ones are precisely appropriate for our needs."

Not a perfect analogy, but I think this may end up being what software in general looks like if LLMs are allowed to write most of it. It will be literally just... so... much... stuff. It will be impossible for anyone to make sense of, confined as we are by our puny attention, retention, and tendency toward boredom. I am not actually convinced that this LLM-generated code will be any better in external functionality than what a skilled human could have written, but it will be far more complex internally than any human being could deal with. Perhaps we could someday make an LLM with an internal sense of distaste for ugliness, with a desire (pride?) to write artful code, with the capacity for boredom. But as it stands, I don't believe they have any of these capabilities.

Anyway, my only interesting point here is that once you start letting (current generation) LLMs write your code, you may end up in a situation where only LLMs can make any sense of your code. This sort of makes LLMs a natural monopoly, in the sense that they make code that is so unparsable to humans that it takes other LLMs to make sense of their work. LLMs may displace programmers literally because they write code that only another LLM could reason about, not because they are geniuses, but simply because they are tireless.